Project outcomes

We built a 0→1 product that eliminated manual Excel workflows requiring 10-15+ hours of data aggregation per assessment cycle, allowing engineers to focus on analysis instead of data wrangling.

Concourse has scaled from 1 to 5 NASA engineering teams across multiple centers since the 2021 deployment, becoming the standard platform for cross-program requirements assessment.

It won NASA Ames Honor Awards in 2021 and 2025 in recognition of the platform's innovation and measurable impact on mission-critical workflows.

01

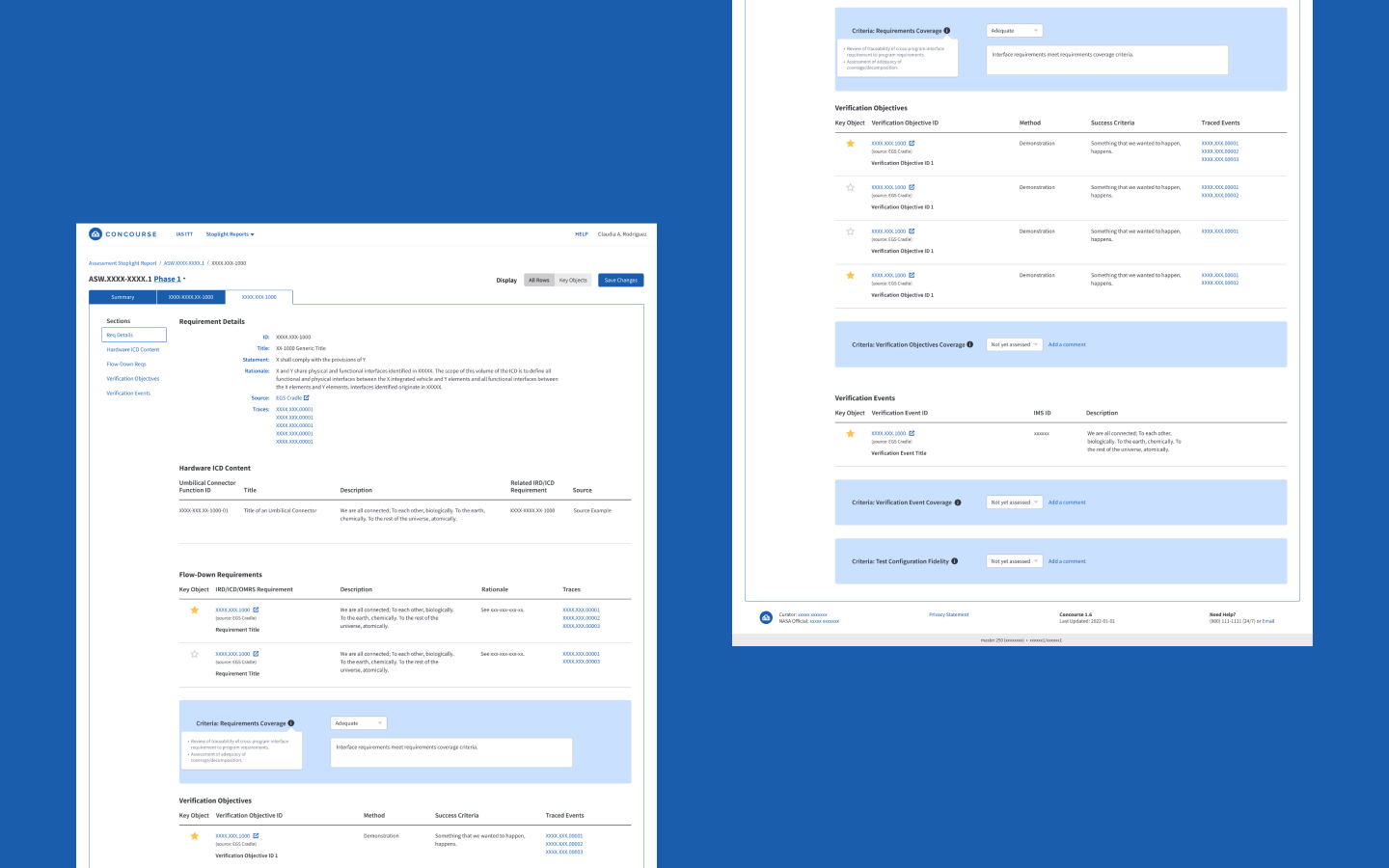

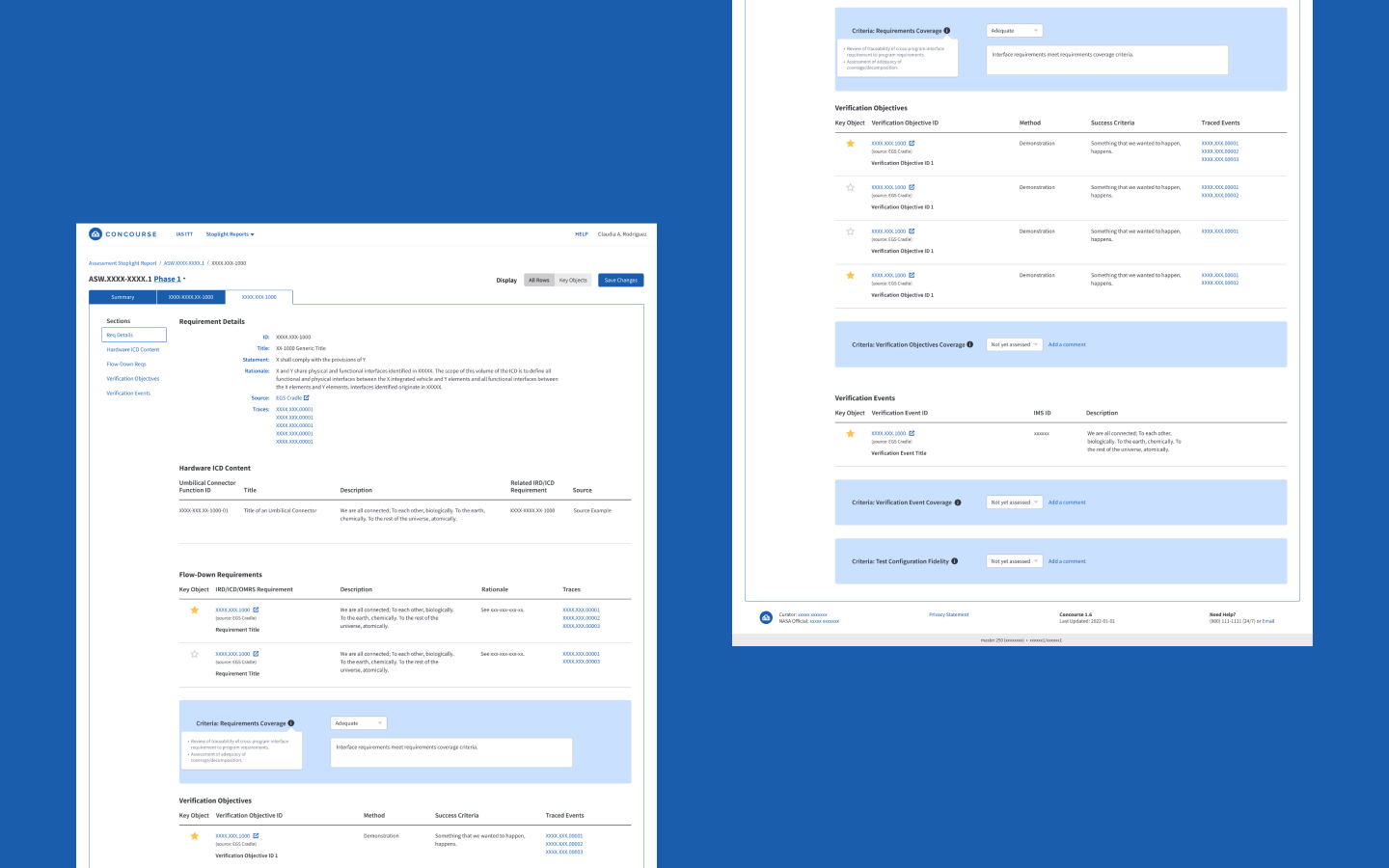

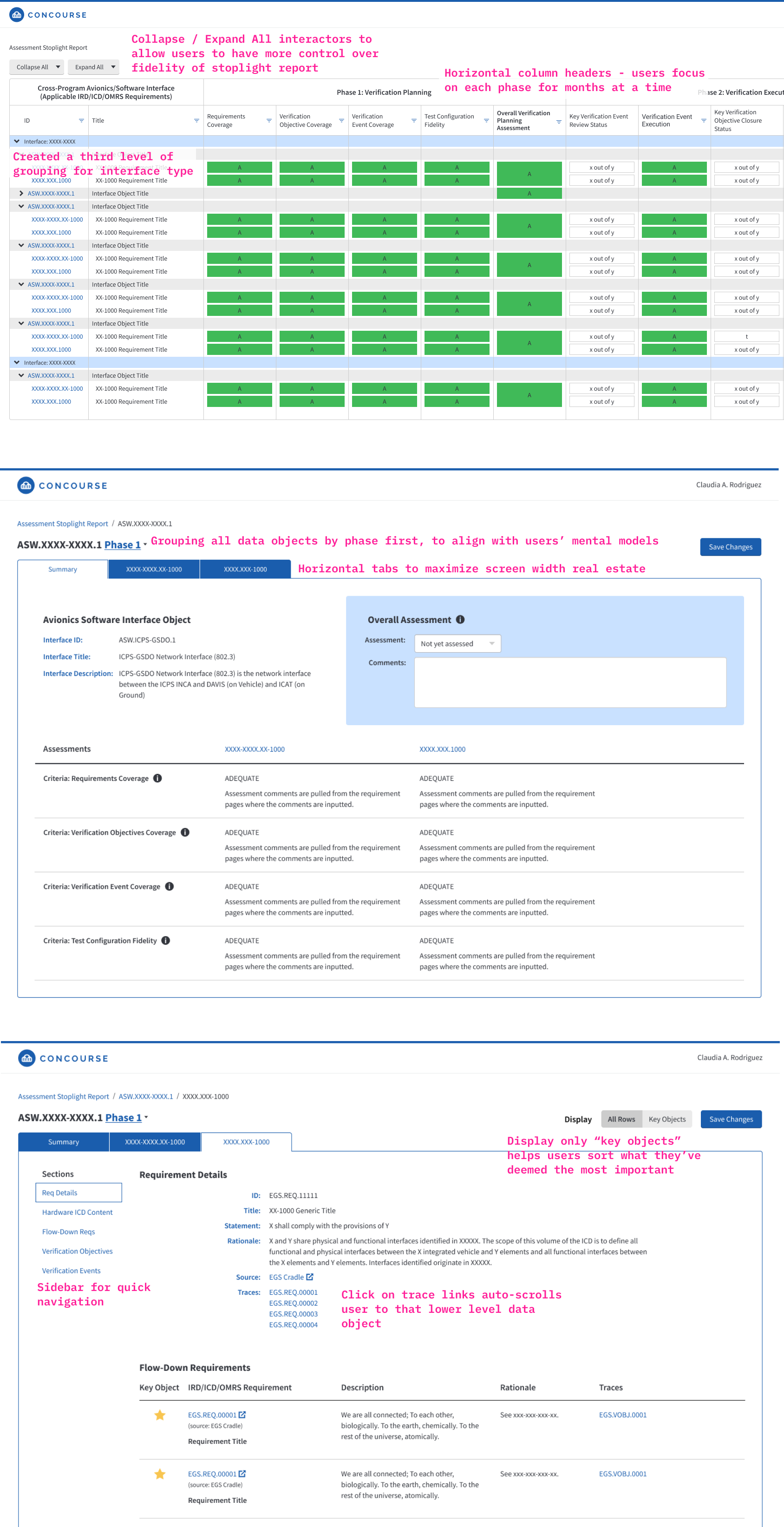

Concourse uses progressive disclosure to show data at 3 levels of fidelity, matched to users in different roles

02

Concourse aggregates relevant data objects on a single page and links them together, allowing users to quickly follow the trail

03

Concourse allows engineers to enter assessments of the data that roll up to higher level views

Research & discovery

We started by interviewing systems engineers to uncover their existing workflow, their pain points, and what was needed for feature parity in the first major deployment.

Engineers were frustrated by the amount of time they spent aggregating and maintaining data in Excel.

Tracing the links between data objects is crucial to the assessment process.

In a dynamic and complex data environment, engineers are always looking to confirm data freshness and integrity.

Design process

As we began our iterative design process, there were a number of considerations we wanted to keep in mind.

01

Where can we automate so our users can spend more time on actual data analysis?

02

How can we reduce cognitive load by providing the right fidelity of data at the right time and place?

03

How might we give our users confidence in the tool regarding data freshness?

We iterated from low- to high-fidelity prototypes, involving stakeholders as co-designers by having them create their own mockups. We then tested the final high-fidelity clickable prototype in think-aloud usability sessions focused on key tasks to uncover issues in critical flows.

Outcome

This new NASA web platform was deployed during the pandemic in 2020 and has since continued to scale to multiple teams across the agency. We've been collecting feedback and iterating on the design ever since.

“Before Concourse, maintaining data integrity was unsustainable — time-consuming and error-prone — and sharing up-to-date results with our technical community was difficult.

Now, Concourse performs the data integration, facilitates our assessment process, and publishes our results through a well-designed web interface.

Our team can focus on core work, and results are available on-demand to anyone in our community.”

🏆 2021, 2025 NASA Honor Award winner

Since the 1.0 deployment, we’ve expanded the Concourse application into a platform that now supports 5 different NASA engineering teams across multiple centers.

We initially invested significant resources into building a custom filtering feature, only to learn through research and usage data that it was rarely used. In hindsight, an incremental rollout—starting with basic filtering capabilities—would have better matched user needs and helped us avoid over-engineering.

Stakeholder input proved crucial to the final design, but we realized we could have saved significant time and reduced rework by engaging them earlier as co-designers.